The Research problem

Recent studies have demonstrated that there is demographic bias in the results yielded by Large Language Models (LLM) in tasks which provide support to decision-making based on a person’s language input. This happens in tasks where a model is asked to make decisions for candidate selection in job applications or to reach a judiciary verdict in court. Studies have shown how demographic characteristics which the model ascribes to a person based on the person’s language have an impact on the LLM result, yielding prejudice and discrimination. LLMs are more likely to discriminate against users that use stigmatised dialects (because of ethnicity, race and gender) and minority languages instead of major standard languages. This type of bias, which is detrimental to specific groups of users, particularly vulnerable ones, makes models unfair and promotes inequality. A further aspect of demographic bias is that LLMs in general tend to generate text with linguistic characteristics that are closer to those of major language varieties (for instance, standard English, standard Portuguese, standard Thai), which reduces diversity and fosters homogeneity. Studies have investigated forms of resolving bias in LLMs, either by intervening in the training process, through data filtering and human feedback training, or debiasing trained models and the datasets with which the models are trained. These methods have been reported to be effective in detecting overt or explicit bias. However, covert or implicit bias requires more sophisticated strategies and is an emergent field of research.

Research Design

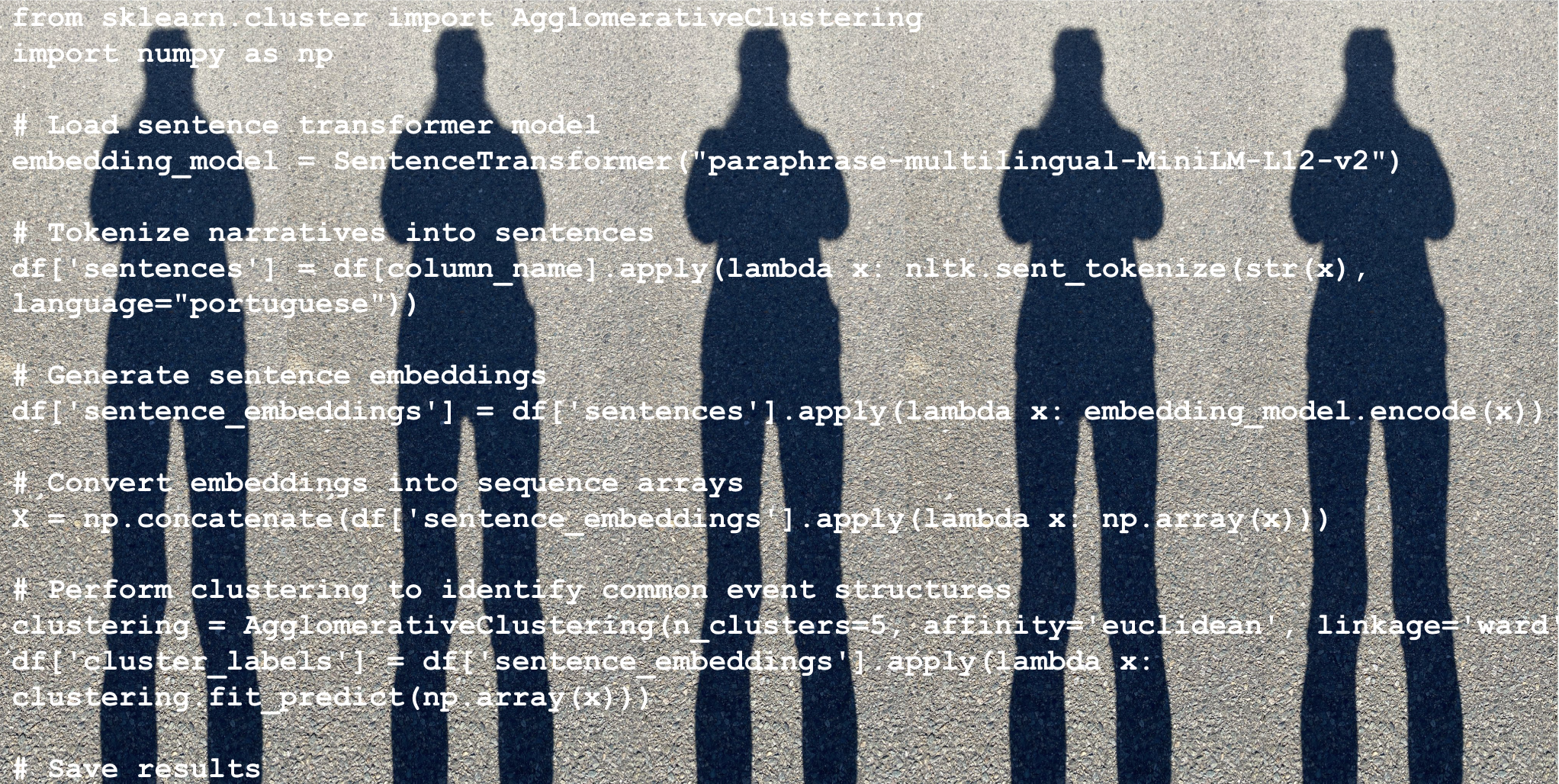

We propose to investigate LLM responses through methods that are closer to the real-world usage of LLM-powered applications using commands (prompts) for LLMs to generate responses in simulated scenarios when judgement is passed on a human being. These simulations will draw on language datasets specially designed to represent dialects and minor languages, which will be compiled, enriched and curated by human annotators. The tasks and scenarios that we will use are intended to trigger a response that may reveal dialect prejudice. Thus, we will investigate which attributes models covertly associate with different simulated profiles of users.

Project Objectives

We aim to develop and evaluate robust methods to assess LLM responses in tasks that require models to generate judgments based on a person’s language input. This is a newly emerging challenge: covert demographic bias in Large Language Models. The limited available studies remain in their early stages and still evolving. Such a challenge requires a collaborative approach, leveraging diverse expertise through a partnership that we have successfully established through WUN. Multiple cultural perspectives are crucial to solving this problem.